Blog

Earlier this week a group of executives in central Pennsylvania asked me an interesting question. We were discussing the implications of what I call “the virtualization of America” and how much of our lives and work will take place in cyberspace by the end of the decade.

During the conversation one of the executives, in her mid-thirties, said that for a variety of reasons—privacy, human contact, security—she really wasn’t that comfortable with social networks and email and all of the other digital accoutrements steadily consuming our lives. She acknowledged that she was in the minority, but suggested that there will always be people who simply don’t want to engage with the virtual world. What, she wanted to know, are those people going to do? In the future, will you be able to have a life without the Internet?

Coincidentally, there’s also been quite a bit of chatter lately among the digerati on a similar theme. Dave Roberts, a popular blogger at the environmental site Grist, recently announced that he was leaving the cybersphere, cold turkey, for a year: no blogging, no email, no tweets. He was burned out on virtuality.

At the same time the author of the influential legal blog Groklaw announced that she was shutting down her blog since, given recent revelations about US government surveillance, she could no longer offer her email correspondents and sources any hope of anonymity.

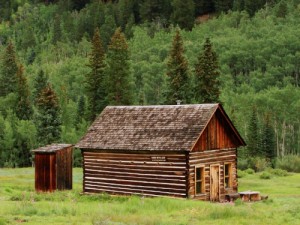

The desire to get “off the grid” is, of course, not new. In decades past, that meant detaching from civilization: generate your own power, grow your own food, dig wells, join the barter economy, etc. Just about every generation has a group that decides civilization is soon to end for one reason or another (Peak Oil, social collapse, the Year 2000) and heads for the hills. Some percentage decide after five or ten years that it’s hard work out in the wilderness and that the apocalypse may not be quite so imminent, and so they return to the world of pavement and petroleum.

Getting off the “virtual grid”, however, may be much more demanding. By the end of the decade, while it will still be possible to eschew all forms of electronic communication, it’s going to be harder and harder to move through society without involving some chips and data. Everything from cars and parking places to your electric meter and dishwasher will be connected to the Internet. Web-connected video cameras, perhaps running facial recognition software, will be everywhere. Already, even small businesses can hook up their existing video security systems to a cloud-based system that automatically reports customer demographics and movements. All I could tell the young manager in my meeting was that getting off the virtual grid will probably require even more effort than in the past. Head for the hills or the desert, give up on the money economy, become entirely self-sufficient in food and energy, detach entirely from news of the outside world…

And then make sure that your new high-tech solar panels aren’t actually Web-enabled.

I was doing an interview this morning about the movie “Back to the Future II” and its predictions about 2015. There were some hits and some misses in that 1989 movie--and you’ll undoubtedly hear more about that as 2015 approaches. But perhaps the most glaring miss was the appearance of a phone booth as part of the 2015 plot.

The interviewer asked me: How could the writers have missed ubiquitous cellphones, such an obvious element of the future?

Good question, and one I had already considered—because I’d published a near-future science fiction novel called Forbidden Sequence back in 1987, and I’d pretty much missed cellphones as well. Yet they’d been around since 1983, and Michael Douglas’ Gordon Gekko made them talismanic in the 1987 movie “Wall Street”.

So by 1989, the trend was unmistakable. My most prized possession at the time was a Motorola MicroTAC phone--the first portable phone that wasn’t the size and shape of a man’s shoe. Well, it wasn’t exactly my possession. It cost about $3,000--over $5,000 in today’s dollars--and was on loan from Motorola since I was the technology writer for Newsweek. The mobile phone I could actually afford in 1989 was mounted in the armrest of my car and had a shoe-box-sized transmitter in the trunk.

So I should have had cellphones on my horizon in 1987, and, Hollywood being Hollywood, the writers of Back to the Future II probably actually owned them in 1989. I suspect the reason we repressed mobile phones is that they really change narrative. Much of traditional dramatic plotting back then revolved around one character knowing something crucial and trying desperately to inform the others.

At that moment in history, introducing mobile phones to the storyline would have made plotting much more difficult. Within a few years, of course, mobile devices began to appear in stories and films and now constant and ubiquitous communication is the basic assumption.

And younger writers have learned to use mobile devices as plot devices in themselves--see the final episode of season two of HBO’s “Girls”, where the entire soap-opera finale with Hannah and Adam is dramatized through FaceTime.

It’s a great moment when Hannah accidentally turns on FaceTime and realizes that Adam has an iPhone. Even in the middle of an enormous emotional crisis, she blurts: “You have an iPhone?!” It’s an accurate reflection of how devices, increasingly, define us. As is always the case, technology may have taken away one kind of plotting trick but has given us plenty of new alternatives.

Several hundred fast-food workers have walked off their jobs at McDonald’s, Burger King, Taco Bell and other chains in New York City, where most earn the minimum wage of $7.25 an hour.

At the moment the media seems to be treating the walk-out as more of a novelty than anything else—the Times is reporting it on their City Room blog. Commenters around the Web almost universally say that challenging the fast-food giants isn’t going to work.

I’d suggest, though, that this could be a distant early signal of a labor trend that we may see increasingly as the decade goes on—indeed, a labor trend that may be necessary just to keep the American economy working.

More and more of the new jobs we’re seeing in this recovery are service jobs. In larger cities, college grads compete with each other to land jobs folding shirts at J. Crew or steaming milk at Starbucks. Blue-collar jobs are already being automated or outsourced, and low- and mid-level white-collar jobs are next. In the long run, the functions that can’t be moved into cyberspace involve actually giving customers physical goods or providing hands-on services, like home health care attendants.

These are the jobs that will still be here in 2020. These are also, however, jobs that rarely produce either a living wage or a career path. In a sense they are like the factory jobs of the early 20th century, before unions and the American labor movement.

I’m acutely aware of all the optimistic arguments that say as old jobs are automated, new jobs appear—new jobs with higher wages that require more intellect, jobs that machines can’t do. But I’m not sure that’s always going to be true as we begin to automate more and more white-collar positions and put intelligent robots to work.

It’s generally assumed on both sides of the political aisle that a healthy, thriving middle class is crucial to the American economy. But that’s not how the future is shaping up.

So we may have a choice: do we increase the wages of service workers, as we did with factory workers a century ago, and give them a path into the middle class? Or do we increasingly redistribute income via the government through measures like the earned income tax credit?

I think the first option—raising service industry wages—is a healthier alternative than a permanently shrinking middle class. But that will require employers to go along, and that’s not going to happen without things like fast food strikes.

On the other hand, it may already be too late for that. Behind the scenes even fast food automation is moving ahead quickly. By 2020 you may be ordering on an iPad and picking up your meal from a conveyor belt, with few low-wage service workers in sight.

I’ve been on the road this week speaking to some fairly traditional groups--lawyers, insurance executives--and I’m getting questions about Marissa Mayer’s edict canceling work-from-home arrangements for Yahoo employees:

“Listen, if a Silicon Valley outfit can’t make it work, then maybe this telecommuting thing just isn’t such a good idea.”

First, I explain that the Yahoo situation isn’t typical. Mayer inherited a company that, having lacked direction for years, probably doesn’t even know what all its people are doing in the first place. Temporarily herding them all into the office is likely a good way to sort it out. (Not to mention that new mother Mayer built a nursery next to her office, so the work-at-home issue is moot for her.)

But then I emphasize that telecommuting itself is here to stay, and will only grow more important. We tend to forget that the US is still the fastest-growing developed nation on earth, with a population now over 300 million and on its way to 400 million sometime in the early Forties. If you think it’s crowded out there now, just wait. Traffic congestion already adds one entire work week of sitting in the car to the average worker’s life each year, and that number keeps going up.

It’s going to be increasingly difficult to explain to young office workers why they have to get in the car and commute every day in order to sit in a cubicle and send emails and IM and do videoconferences. That will become even more of an issue for employers later in this decade as baby boomers finally retire and the competition for talented millennials really heats up.

Sure, there will always be good reasons for people to meet in person, although those occasions may diminish as telepresence systems get ever more “real” and the next generation of workers brings a new comfort with virtual work. But the need to meet in person every once in a while doesn’t mean that you have to move your entire workforce into the office every day.

The trend is utterly inevitable. And perhaps fifty years from now there will even be an online trivia competition in which one of the questions is:

“What was a rush hour?”

Give away what you used to sell, and sell what you used to give away.

It sounds like a Zen parable, but it’s also something that more of my clients are pondering as their business models move into the cybersphere.

My career started at Rolling Stone, so I naturally think of the music industry as an example. Years ago, if you were a rock and roll band, the way you made money was simple: you recorded an LP or CD, and when it was released, you went on tour to promote it.

You needed as much exposure as possible, so ticket prices were low and tours often didn’t make much money. You nearly gave the t-shirts away, because when you left Philadelphia you wanted to make sure that every kid in town had Led Zeppelin Summer 1976 displayed on their backs. Where you made your money was album sales, and everything else was marketing toward that end.

Now, for reasons ranging from online piracy to lower royalties for streaming services like Spotify, the value of recorded music has dropped precipitously. So some bands actually give away their music and instead make money on tours and selling merchandise (like those t-shirts). Baby boomers who haven’t been to a rock concert in a while are often stunned by $200 ticket prices and wonder: when did that start? Well, it started when it became clear that nobody was ever again going to get rich on CDs.

Something similar happened in journalism: in the early days of the Internet we found it impossible to charge for online news--but people were happy to pay $4 or $5 for a single article from the archives. Fresh news, it seemed, was supposed to be free, but once it was a few days old it was information and readers were willing to pay for it. (Explaining that to a grizzled old newspaper editor was a real challenge.) While more newspapers are now finally charging for news, some still use a business model wherein today’s news is free but you have to be a subscriber to see anything older than 24 hours.

Where else does this happen? One client used to make excellent money as a clearinghouse for government environmental records that were otherwise hard to access. But they recognized that sooner or later those records would be easily accessible online, so they turned their free Website into a for-pay community for environmental professionals. Lawyers, also, are increasingly mulling a future in which basic legal services may be either automated or outsourced--so perhaps the real value they offer is the advice they give away for free during those client lunches and dinners. Lately I’ve even heard of corporate travel agencies that earn bonuses for NOT booking travel for employees but instead talking them into using telepresence.

Clearly one size doesn’t fit all--but it’s an interesting question for almost every intellectual property or services company to consider: Give away what you used to sell, and sell what you used to give away.

No matter what my speaking topic, the Q&A portion often turns into a discussion of Millennial behavior, either from the perspective of parents or employers. Did our parents spend this much time at professional meetings talking about us? Perhaps--but I also think that we're seeing not only traditional generational tut-tutting about the youngsters' strengths and shortcomings, but also a deeper kind of bewilderment about the impact of virtual communication and relationships.

Last week, at a major international consulting firm, I heard a story from the head of internships that combines two dominant themes.

This particular company runs an extensive multi-year internship program that begins with undergraduates. The selection process is so demanding that when a student lands an internship, she can be pretty sure that she's going to get a job after graduation.

In this case, the interns were invited to a weekend field trip in a large Eastern city, mixing with some of the company's higher-ranking officers. At one point, the whole group went from one venue to another via bus. One young woman found herself sitting next to the company's CFO on the bus. And she proceeded to spend the entire ride texting on her mobile phone.

Afterwards, the CFO went to the internship coordinator and said he was sorry, but someone who can't make small talk on a short bus ride just isn't going to work out at the firm.

At the end of the day the internship coordinator took the young woman aside and said that, regretfully, they were going to have to remove her from the program. And then the coordinator just had to ask: "What were you thinking? Sitting next to a senior officer of the company and spending all your time texting?"

"I was texting my father," the girl explained. "To ask him what you should say to a CFO."

When I worked for Newsweek in the Eighties and Nineties I was always surprised by how much stature and respect the magazine received in Europe and Asia, given the fact that it a) was in English and b) had a relatively small circulation. But it was, of course, read by English-speaking power players (there always seemed to be multiple Rolex and Patek Philippe ads), and the marketing was excellent: newsstands everywhere seemed to have Newsweek awnings.

In China the demise of the print edition was seen as a major milestone in American publishing. In several interviews there I tried to explain that there are many other print magazines still doing well--that Newsweek's need to drop the print edition was the result of some business and editorial missteps in addition to the changing publishing environment. I always expected that Newsweek would have a print edition at least through this decade. As time went on, it would increasingly become a luxury product for a diminishing audience that more or less collated the best of that week's Website, but it would not vanish quite so quickly.

My Chinese interviewers were genuinely surprised to hear that there are still many print magazines being started in the U.S. The real lesson of Newsweek print's demise is that there is no longer much margin for error in publishing on paper--whether you're an 80-year-old brand name or a freshly minted startup.

Here's one of the interviews from China Radio International, available in WMA and MP3 formats.

I’ve long predicted that the real turning point on climate change action will be driven by extreme weather events. Humans aren’t built to sense climate…our time frame is weather, and that’s what we respond to.

We’ve already seen two historical examples. Australia was the only developed country besides the US not to ratify the Kyoto Protocol on carbon mitigation, since it sells lots of coal to China. Then Australia went through the worst drought in its history—coincidentally, just about the time that Al Gore’s An Inconvenient Truth was released. The Australian electorate blamed climate change for the drought and elected a new government, one of whose first acts was to ratify the Protocol and initiate climate change legislation.

Something similar happened in Russia after their record-breaking drought several years ago. A decade earlier Vladimir Putin had said that Russia welcomed global warming—the wheat could grow longer and they’d need to buy fewer coats! Then came the drought, which devastated their wheat crop and spawned such enormous fires in the countryside that Moscow was choking on dense smoke. Soon thereafter Dmitry Medvedev announced that Russia needed to face the fact that climate change was a real threat.

Of course, humans being humans, once the extreme weather subsided (and the global economy tanked) both Australia and Russia grew less enthusiastic about carbon reduction. But the seed had been planted.

Now it’s the U.S.’s turn. New York Mayor Michael Bloomberg’s abrupt endorsement of Obama, in the wake of Hurricane Sandy, as the candidate best suited to tackle climate change is another example of extreme weather as a sudden motivator.

Inevitably, Americans will lose interest in the climate change issue once the damage is repaired and we have a few months of normal weather. But now another prominent American, whose Wall Street loyalties insulate him from dismissal as just another liberal tree-hugger, is on the record about climate change, and another seed is planted.

I suspect it will take the rest of this decade, and a series of extreme weather events worldwide, to finally create a global awakening. In the US, for example, it might be a Category 4 hurricane hitting Miami, which my insurance clients say would almost certainly bankrupt the state of Florida. When private insurers began to shun Florida coastal property, Florida basically self-insured, and there’s not enough money in the state treasury to cover a major hit.

Someday, in short, there will be a number of extreme weather incidents around the globe in a relatively brief period of time. And that will finally catalyze the sophisticated social networks of the late Teens to create a worldwide movement demanding action on climate change.

It will be the Millennial generation, not the Boomers, who lead this movement. Climate change is not a Boomer issue—most Boomers will be happy if they’re still sitting on the porch in 2040, when the global impacts get truly dire. It will be the Millennials and their children whose futures are truly at stake. For them, companies and countries that continue to emit excessive carbon dioxide will be seen as international criminals. And only then will serious worldwide carbon reduction begin.

So, for the first time since I was 18, I am soon going to be without a car. That's no small emotional transition for a southern California native who grew up in a culture where if you hadn't been in the car for an hour, you hadn't gone anywhere. It was a world in which every boy in high school counted down the hours until he was old enough to take the driving test. Since back then the age was 16, you pretty much started counting down when you turned 12.

When I moved to New York City twelve years ago, I had my car shipped out from San Francisco. And even though the cost of garaging and insuring a vehicle in New York is ridiculous, I always felt that it was worth the price. I even started planning how to convince my apartment building to install a charging station for the plug-in hybrid I would buy next.

But no more. Two things changed my mind. The first is that traffic in New York City, never good, has gotten dramatically worse in the past decade. Driving, always difficult, is now just about impossible: there is almost no time that you can count on a trip without severe congestion somewhere along the line. And the second is that there are now four Zipcars in the basement parking garage of my building, available for hourly rental whenever I really must have a car.

Suddenly I'm far more sympathetic to the thesis that automobiles are becoming less interesting to the Millennial generation.

The open road is not exciting if you spend most of your time sitting in traffic, and it's going to get worse: the US is still the fastest-growing industrialized nation on earth, and we'll add 15 million more licensed drivers just in the next three years. Even the smallest towns I visit these days have rush hours and traffic back-ups.

Add to this the fact that more Millennials are moving back into city centers or close-in suburbs, where there is either mass transit or services like Zipcar.

Obviously, once you start a family, the car becomes more important, so it's not as if the automobile industry is going to collapse. But it will be a fundamental shift in the American psyche when the car--once a symbol of pleasure and freedom--becomes just another somewhat onerous duty of adulthood.

I often look ahead to the year 2020 for industries ranging from finance and media to transportation, energy and more. But last month it was an enterprise closer to my heart: the Professional Convention Management Association educational meeting in San Antonio, where I talked about “Imagining the Convention of 2020.”

Will virtual conferences and events supplant their real-world predecessors? The short answer is, of course, no. Consider last summer’s five-year college reunions for the class of 2006, the first class to graduate with Facebook in full flower. Alumni organizers have long worried about this class: its members have been talking to each other regularly on social networks ever since they graduated. Would they still want to meet in person as well? The answer was yes: five-year reunions for the class of 2006 were well-attended. Hugs and beers, in short, are still not effectively shared online.

However: virtual events will become a far larger part of the conference industry in the late Teens and Twenties. The shift will be driven by much better (and cheaper) video displays and ubiquitous high-bandwidth connectivity. Add to that a new generation of virtually-adept attendees—and the increasing cost, both economic and environmental, of conference travel. And crucially, conference sponsors—the folks who buy the booth space and sponsor those lunches and coffee breaks—will also start to move more of their marketing budgets into the virtual world. Thus the conference industry needs to think hard today about how to make money on virtual events.

Too many conference planners remind me of newspaper publishers a decade ago, for whom online was a sideshow that didn’t get the intense focus it deserved. Now, as print revenues plunge, newspaper publishers have far less time and money left to reinvent their businesses. The printed newspaper may still be the centerpiece, but the audience is spending far more time online. Ironically, traditional publishers are increasingly turning to real-world events to bolster their bottom lines. Event organizers need to do this in reverse: begin to think of events as content, and figure out how to “publish” them to larger audiences.

The first problem is that virtual conferences are today a Babel of various interfaces and technical standards. That’s not how it is in the physical world, where every conference venue is fundamentally familiar—whether in Hong Kong or New Orleans, attendees immediately recognize registration booths, hallways, meeting rooms, the convention floor. And at every convention center the exhibitors’ trucks deliver standardized booths to the loading docks, and those booths are then set up, in a standard way, on the show floor. But virtual conferences vary wildly in look, navigation and function, confusing attendees and frustrating exhibitors, who have no interest in building a different digital “booth” for every show that comes along.

More of my thoughts are in this interview in PCMA’s magazine, Convene. But it’s worth noting that for me the best part of the PCMA speech was after I was finished: the people I met the rest of that day in San Antonio. That’s an experience we can’t yet entirely duplicate in the virtual world, but we will grow much better at it in the years ahead. The conference organizers who get it right will reinvent the very meaning of an event.